Plugin / WordPress Robots.txt optimization (+ Sitemap) – Website traffic, ranking & SEO Booster + Woocommerce

PagupFrequently Asked Questions (FAQ)

Ratings

Rating breakdown

Details Information

Version

First Released

Total Downloads

Wordpress Version

Tested up to:

Require PHP Version:

Tags

Contributors

Languages

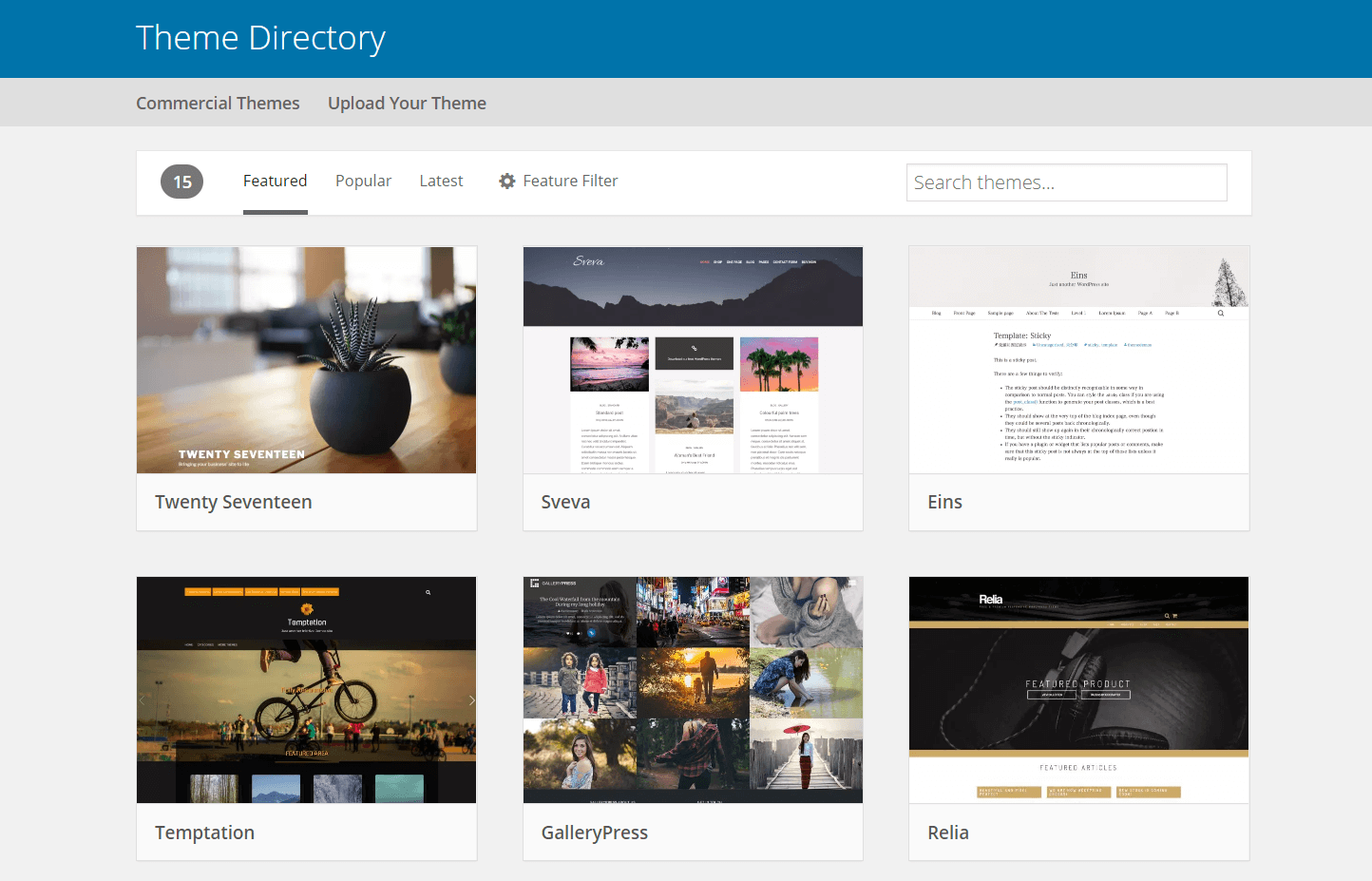

DIRECTORY DISCLAIMER

The information provided in this THEME/PLUGIN DIRECTORY is made available for information purposes only, and intended to serve as a resource to enable visitors to select a relevant theme or plugin. wpSocket gives no warranty of any kind, express or implied with regard to the information, including without limitation any warranty that the particular theme or plugin that you select is qualified on your situation.

The information in the individual theme or plugin displayed in the Directory is provided by the owners and contributors themselves. wpSocket gives no warranty as to the accuracy of the information and will not be liable to you for any loss or damage suffered by you as a consequence of your reliance on the information.

Links to respective sites are offered to assist in accessing additional information. The links may be outdated or broken. Connect to outside sites at your own risk. The Theme/Plugin Directory does not endorse the content or accuracy of any listing or external website.

While information is made available, no guarantee is given that the details provided are correct, complete or up-to-date.

wpSocket is not related to the theme or plugin, and also not responsible and expressly disclaims all liability for, damages of any kind, arising out of the use, reference to, or reliance on, any information or business listed throughout our site.